Закончил

недавно курс:

Data Manipulation at

Scale: Systems and Algorithms

University of

Washington

Ощущения

двойственные: вроде и знаний прибавилось,

но как-то неадекватно потраченному

времени (а времени жалко).

Подавляющее

количество лекционного времени занято

поверхностными рассказами о том,

что происходит

вокруг Data Sciense и, собственно, что это

такое. С уклоном в Биг Дата.

Не знаю, на

какую целевую аудиторию рассчитан курс,

на бухгалтеров с начальными знаниями

в СУБД и программировании?

Которые хотят

стать аналитиками? Мне, с моим бэкграундом

программиста, было откровенно скучно

90% времени.

А когда скучно

не было, настойчиво хотелось более

углубленного изучения материала.

Задачки тоже

ерундовые, даже думать особо не надо.

В целом: очень

поверхностный, вводный курс в современный

анализ данных.

Silly bus

Data Science Context

and Concepts:

– Examples and the

Diversity of Data Science

– Working Definitions

of Data Science

– Characterizing this

Course

– Related Topics

– Course Logistics

Assignment: Twitter

Sentiment Analysis

Relational Databases

and the Relational Algebra:

– Principles of Data

Manipulation and Management

– Relational Algebra

– SQL for Data

Science

– Key Principles of

Relational Databases

Assignment: SQL

MapReduce and Parallel

Dataflow Programming:

– Reasoning about

Scale

– The MapReduce

Programming Model

– Algorithms in

MapReduce

– Parallel Databases

vs. MapReduce

Assignment: MapReduce

NoSQL: Systems and

Concepts:

– What problems do

NoSQL systems aim to solve?

– Early key-value

systems and key concepts

– Document Stores and

Extensible Record Stores

– Extended NoSQL

Systems

– Pig: Programming

with Relational Algebra

– Pig Analytics

– Spark

Graph Analytics:

– Structural Tasks

– Traversal Tasks

– Pattern Matching

Tasks and Graph Query

– Recursive Queries

– Representations and

Algorithms

Очень интересные

темы, но, увы, галопом-по-европам.

Дайджест

Data Science Context and Concepts

Data

Science: intersect(Programming, Math/Statistics, Substantive

expertise)

Simple methods + lots

of not-so-good data vs sophisticated methods + not-so-many good and

clean data.

First approach wins.

Describing/quantifying

uncertainty making a statistical argument;

Conveying the results

to the public:

critical to the success

of the effort, and it's far from trivial.

Collecting data from

web, etc; process data; make revealing presentations:

it's data science.

Graph analytics,

databases, visualisation, large datasets, adhoc interactive analysis,

repurposing:

what data scientist do.

Data scientist: find

nuggets of truth in data and then explain it to the buisness leaders.

Strong mathematical

background.

Skills: statistics,

data munging, visualisation.

80% of analytics is

sums and averages.

Concerned with the

collection, preparation, analysis, visualisation, management and

preservation of large collections of information.

Tasks:

– 80% of the work:

preparing to run a model (data gathering, cleaning, integrating,

restructuring, transforming, loading, filtering, deleting, combining,

merging, verifying, extracting, shaping, massaging)

– running the model

– other 80% of the

work: interpreting the results, communicating the results

Data products:

data-driven apps (spellchecker, translator, ...)

If you're a DBA, you

need to learn to deal with unstructured data;

If you're a

statistician, learn to deal with big data.

if you're a software

engineer, learn statistical modeling and communicating results.

If you're a business

analyst, learn algorithms and tradeoffs at scale.

Abstractions: matrices,

linear algebra, relations + relation algebra.

Barrier: Incompatible

data formats, non-aligned data, inconsistent data semantics.

90% of time for

'handling' data (not doing science).

Working with large and

heterogeneous datasets requires significant programming chops.

Science: empirical →

theoretical → computational → eScience (massive datasets).

Sometimes possible only

one-pass through dataset.

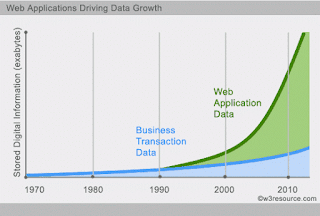

Data: Volume, Variety,

Velocity, (Veracity, ...)

Big Data: hard data (1G

social graph, build a recommender system; 10K spreadsheets to

integrate).

– data exhaust from

customers; new sensors; ability to keep everything

Assignment:

– sentiment of each

tweet: sum(sentiments for each word)

– sentiment of new

word: numPositiveTweets — numNegativeTweets

– word frequency:

wordCount / numWords

– happiest state:

tweetsForState; max(sumSentiment / numTweets)

– top ten hash tags:

sort(tagCount / totalTags)

Куски кода

выложу отельным постом.

Relational Algebra (algebra of tables):

– applicable to Big

Data

– well known

– abstracted /

optimized query plans

– SQL to express

queries, operations

RA expression does

specify order of operations, SQL does not.

SQL: declarative

language.

Data model is about:

– structures

(rows/columns, key-value pairs),

– constraints (all

rows must have the same number of columns, ...),

– operations (get

next n bytes, find the rows where column 'a' is 'b').

Database: a collection

of information organized to afford efficient retrieval.

What problem do they

solve:

– sharing (concurrent

access by multiple readers/writers)

– data model

enforcement (data clean, organized)

– scale (work with

datasets larger than RAM)

– flexibility (use

the data in new, unanticipated ways)

Questions:

– how is the data

physically organized on disk

– what kinds of

queries are efficiently supported

– how hard is it

update/add the data

– what happens when I

encounter new queries? Do I reorganize the data?

Relational DBMS were

invented to let you use one set of data in multiple ways, including

unforeseen

Algebraic structure of

the data, allowing reasoning and manipulating independently of

physical data representation.

Relational algebra

operations (closure: operation return relation):

– set operations

(union [all], intersection-join, difference-except)

– selection

(condition where ...)

– projection (select

[distinct] a, b, ...)

– join (cross

[cartesian] product, left/right/inner/outer join, theta join)

and extended (bag) ops

– duplicate

elimination (distinct)

– grouping,

aggregating (group by, sum, count, ...)

– sorting (order by)

Every paper will assume

set semantics; every implementation – bag semantics.

Query optimization:

apply laws/rules and find less expensive equivalent

– like arithmetic

((z*2)+((z*3)+0))/1 = (2+3)*z

– same logical

expression → different physical algorithms (declarative query

language)

– explain … mapping

SQL to RA

UDF: user defined

functions:

– scalar

– aggregate

– table

Views: logical data

independence (just a query with a name)

– abstraction and

reuse

Indexes: for searching

needle in a haystack.

SQL assignment:

Сниппеты кода

выложу отдельным постом.

MapReduce, parallel dataflow

Shared nothing vs

– shared memory

(multicore PC)

– shared disc

(Oracle, …)

only Shared Nothing can

be scaled out to 1000s of nodes.

Implementation:

– master node

– M splits — read

to M map tasks, write to R regions – read to R reduce tasks

– DFS, fault

tolerant, files by blocks, block size 64 mb

– YARN, task

scheduler, fault tolerant

Scalable:

– can make use of

1000s of cheap computers

– for N data items

you must do no more than (N^m)/k operations

– scale up vs scale

out

MapReduce:

– map (inkey,

invalue) => [(outkey, outvalue)], eg: (docname, listofwords) =>

[(word, frequence)]

– shuffle,

group/aggregate outkeys

– reduce (outkey,

[outvalues]) => [resvalues], eg: (word, [frequence]) => (word,

totalfrequence)

– work with tuples,

bags of tuples

– think backward:

what result you have to produce, from what reduce input you can do

it, what you have to do in map phase to generate reduce input.

Inverted index: «eat»:

(tweet1, tweet3); «pancakes»: (tweet3, tweet2)

– map to (word,

tweetnum)

– reduce input is the

result.

Relational join in MR:

– lump two datasets

into one

– map to (joinkey,

datarow)

– reduce to (joinkey,

tab1row, tab2row)

Counting friends in MR:

– record ('Jim',

'Sue') is an edge from digraph

– counting like in

'word count' example, key is a person name

Matrix multiplication

in MR:

– reduce output must

be in form ((i, j), val) → (i,j) is a key

– mapper have to emit

each cell from A numBcols times and so on

Parallel databases vs

MapReduce

– MR: cheap scale

out, fault tolerance, easy to deploy

– PD: schema, low

latency, indexes, consistency (transactions/ACID), SQL, but very

expensive

– SQL, indexes,

schemas, low latency, algebraic optimisation emerging in MR world

(Pig, Hive, Impala, Spark, ...)

– relational algebra

everywhere

For data fitted to RDB

(not so big), RDBMS may be faster than MR

– MR load data faster

– RDBMS process data

faster (preprocessing done in loading phase)

MapReduce

assignment:

Сниппеты кода

выложу отдельным постом.

NoSQL: key-value, extensible record, document DB

NoSQL systems are purely about scale rather than analytics, and are

arguably less relevant for the practicing data scientist.

Joins, language/algebra

barely present in NoSQL bases:

– CouchDB (no

language)

– Pig, Hive, Spark,

Impala

It's a very small

fraction of all available databases. Scale out for write, read –

all about it.

NoSQL features:

– scale horizontally

– replicate and

partition data over many nodes

– simple API – no

query language

– weak concurrency

model (vs ACID transactions)

– efficient use of

distributed indexes, RAM data storage

– dynamically add new

attributes to data records

ACID:

– atomicity

– consistency

(written data must be valid according to all defined rules)

– isolation (often

relaxed)

– durability

Relaxing consistency

guarantees: CAP theorem

– consistency,

availability, partitioning – choose 2 of 3

– w/o partitioning no

scalability => AP, no C

– big scale, frequent

failures => transactions can't be finished fast enough

– eventually

consistent data.

Consistency:

– do all applications

see all the same data?

Availability:

– can I interact with

the system in the presence of failures?

Partitioning:

– if two sections of

your system cannot talk to each other, can they make forward

progress?

– If not => no

Availability

– If yes => no

Consistency

Conventional databases

assume no partitioning.

NoSQL systems may

sacrifice consistency.

Paxos protocol for

solving consensus:

– distributed voting

scheme

MVCC: multi-version

concurrency control:

– creates new version

on write

– checks timestamps

to determine which version to read

Major impact systems:

– Memcached:

in-memory indexes can be highly scalable (consistent hashing/hash

ring)

– Dynamo: idea of

using eventual consistency as a way to achieve higher availability

and scalability

(SLA: respond within

300 ms for 99.9% requests at the 99th percentile); vector clocks

– BigTable:

persistent record storage could be scaled to 1000s of nodes

BigTable/Hbase:

– sparse,

distributed, persistent multi-dimentional sorted map

– record is: (row,

col, time): string

– data sorted by row

key

– row key range

broken into tablets (tablet: unit of distribution)

– column families

(family is unit of access control, mem.accounting, disc accounting)

– write through

memtable, SSTables (disc chunks) are immutable

RA and declarative

query languages comes to MapReduce:

– Hive

– Pig

Take joins. To make a

join system can use different methods/strategies (diff. query plans).

System should pick the

right one, not programmer => we need declarative query language

with optimizer to make RA plan.

Enterprise needs:

– ACID

– declarative query

language

– schema/standards

Pig: almost SQL on top

of Hadoop (MapReduce), nested types:

– data model: atom,

tuple, bag, dict (map)

– operators: load,

filter, group/cogroup (join), distinct, foreach, flatten, store,

union, cross, order, ...

Spark: iterative tasks,

cached RDD:

– RDD: distributed

collection of key-value pairs

– transformations →

actions

– lazy evaluating

(optimization)

– operations: filter,

group, sort, union, cross, … (RA?)

Graphs: Structural tasks

Graph-structured data are increasingly common in data science

contexts due to their ubiquity in modeling the communication between

entities

graph:

– set of vertices V

– set of edges E

– edge is a pair (v,

w), directed, undirected, weighted, capacitated, loaded with data

– structural

algorithms, traversal, pattern-matching

Structural:

– in-degree,

out-degree

– degree histogram:

for d = 0..maxOutDegree find n = count(vertices.outdegree == d)

– random graph have

exponential distribution: n(d) = (c*x^d), x < 1 (most vertices

have small outdegree)

– human generated

graphs have zipf distribution: n(d) = 1/d^x, x > 0 (long tail,

most vertices have significant outdegree)

– connectivity

coefficient: min number of vertices you need to remove that will

disconnect the graph

– centrality:

closeness centrality of

a vertex: average length of all its shortest paths;

betweeness centrality

of v: the fraction of all shortest paths that pass through v

Traversal tasks:

– PageRank

(eigenvector centrality): propagate node rank to connected nodes,

divide to outgoing links, mitigate by damping factor

– minimum spanning

tree (smallest subset of edges)

– Euler's path: visit

every vertex crossing each edge once, easy

– Hamiltonian path:

visit every vertex once, NP-complete

– maxflow/mincut

PageRank in details:

– formula: PRv = (1 -

damp) / len(Vertices) + damp * sumPointingToV( PR(wi) / wi.outdegree)

– meaning:

probability of stepping into vertex walking randomly

Graph: Pattern matching tasks

– pattern example:

two vertices connected to each other directly. Vertices and edges may

be labeled.

Popular pattern:

triangle A → B → C → A, a cycle with 3 vertices.

– brute force: for

each edge (v, w) check every vertex u: if (v, u) and (w, u) is edges

=> triangle.

RDF – resource

description framework (subject, object, predicate):

– given a graph with

edge labels

– pattern match

example: A interferes with B regulates C associated with D

– need some kind of

pattern expression language

– RDF: resource

description framework, triples (subject, predicate, object)

– triple is just a

labeled edge in a graph

Relation algebra,

Datalog for graphs

– SQL query for

pattern:

select i.subject from R

i, R r, R a where i.predicate = 'interferes with' and

r.predicate =

'regulates' and a predicate = 'associated with' and

i.object = r.subject

and r.object = a.subject

– Datalog query:

Ans(x) :-

R(x, interferes witn,

y), R(y, regulates, z), R(z, associates with, w)

Another example:

– relations:

InterferesWith(drug1, drug2); Regulates(drug, gene);

AssociatedWith(gene, disease);

– SQL: select i.drug1

from InterferesWith i, Regulates r, AssociatedWith a

where i.drug2 = r.drug

and r.gene = a.gene

– Datalog: Ans(x) :-

InterferesWith(x, y),

Regulates(y, z), AssociatedWith(z, w)

Recursion

knew(Person2) :-

knew(Person1) email(Person1, Person2, Message, Time), Time < 'June

3'

– sort of BFS

algorithm, loop ang queue

– beware: cycles in

the graph

– MapReduce, Spark:

loop, cache invariants

– in graphs with ZIPF

distribution (long tail) you have to break looping: insignificant

data going and going but you get all you need already

Pregel, Giraph, Hama:

– PageRank in

MapReduce have problems: all graph data shuffled on every iteration;

we need to shuffle only

new rank contributions; iterations controlled outside of MR.

– Pregel: batch

algorithms on large graphs

while any vertex is

active or max iterations not reached:

for each vertex: #

parallelized

process messages from

neighbors from prev.iteration

send messages to

neighbors

set active flag

Data representation

top 5 highest in-degree

vertices: select top 5 w, incount from (

select w, count(v) as

incount from edges group by w

) order by incount

– adjacency list:

edges(v, [w, …]); good for MR

– adjacency matrix:

good for finding specific edge

Different algorithms,

different properties.

original post http://vasnake.blogspot.com/2016/02/data-manipulation-at-scale-systems-and.html

Excellent article. Very interesting to read. I really love to read such a nice article. Thanks! keep rocking.Big Data Hadoop Online Training Bangalore

ОтветитьУдалить